2023

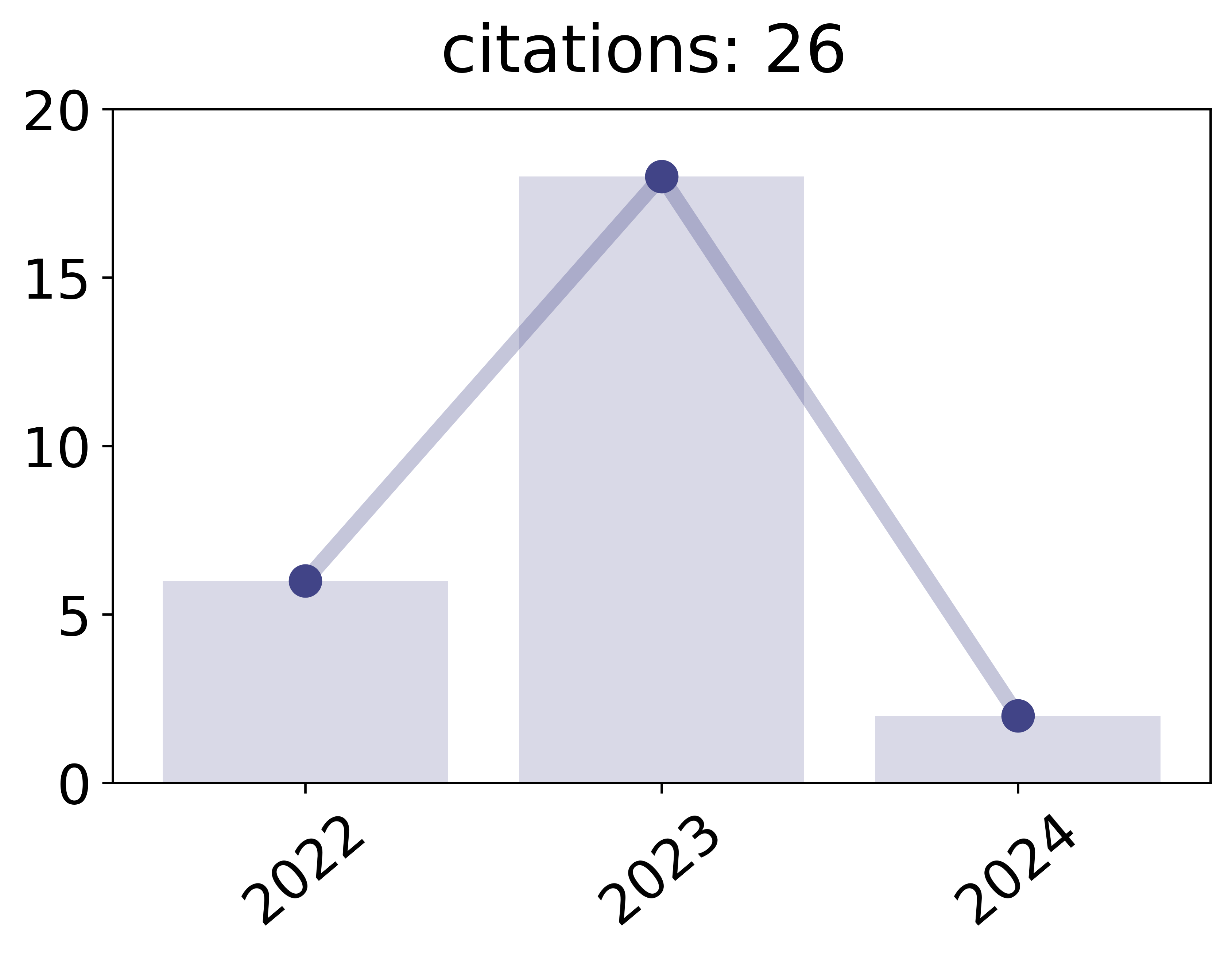

Kamp, Michael; Fischer, Jonas; Vreeken, Jilles

Federated Learning from Small Datasets (Proceedings Article)

In: International Conference on Learning Representations (ICLR), 2023.

Tags: black-box, black-box parallelization, daisy, daisy-chaining, FedDC, federated learning, small, small datasets

@inproceedings{kamp2023federated,

title = {Federated Learning from Small Datasets},

author = {Michael Kamp and Jonas Fischer and Jilles Vreeken},

url = {https://michaelkamp.org/wp-content/uploads/2022/08/FederatedLearingSmallDatasets.pdf},

year = {2023},

date = {2023-05-01},

urldate = {2023-05-01},

booktitle = {International Conference on Learning Representations (ICLR)},

journal = {arXiv preprint arXiv:2110.03469},

keywords = {black-box, black-box parallelization, daisy, daisy-chaining, FedDC, federated learning, small, small datasets},

pubstate = {published},

tppubtype = {inproceedings}

}

2021

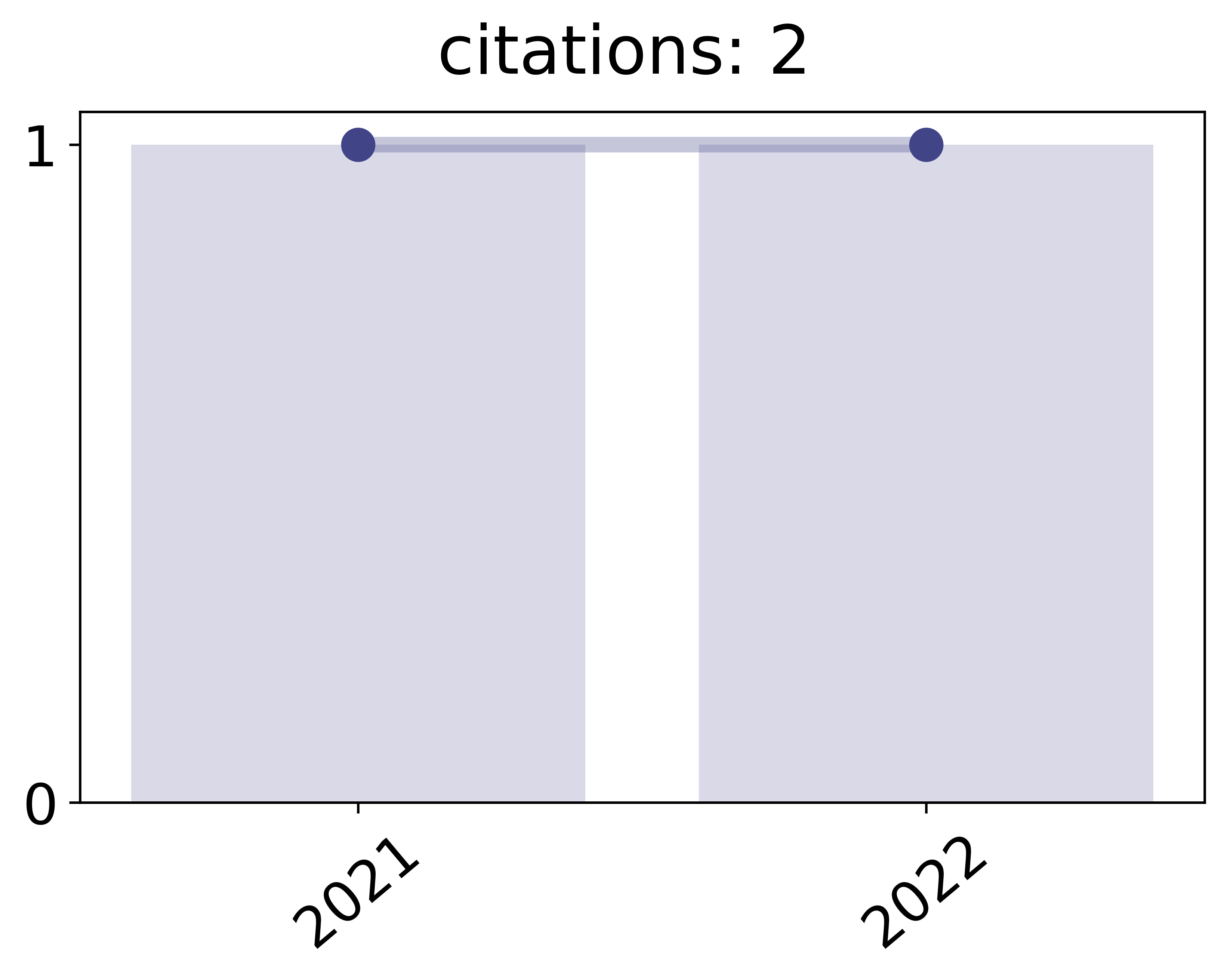

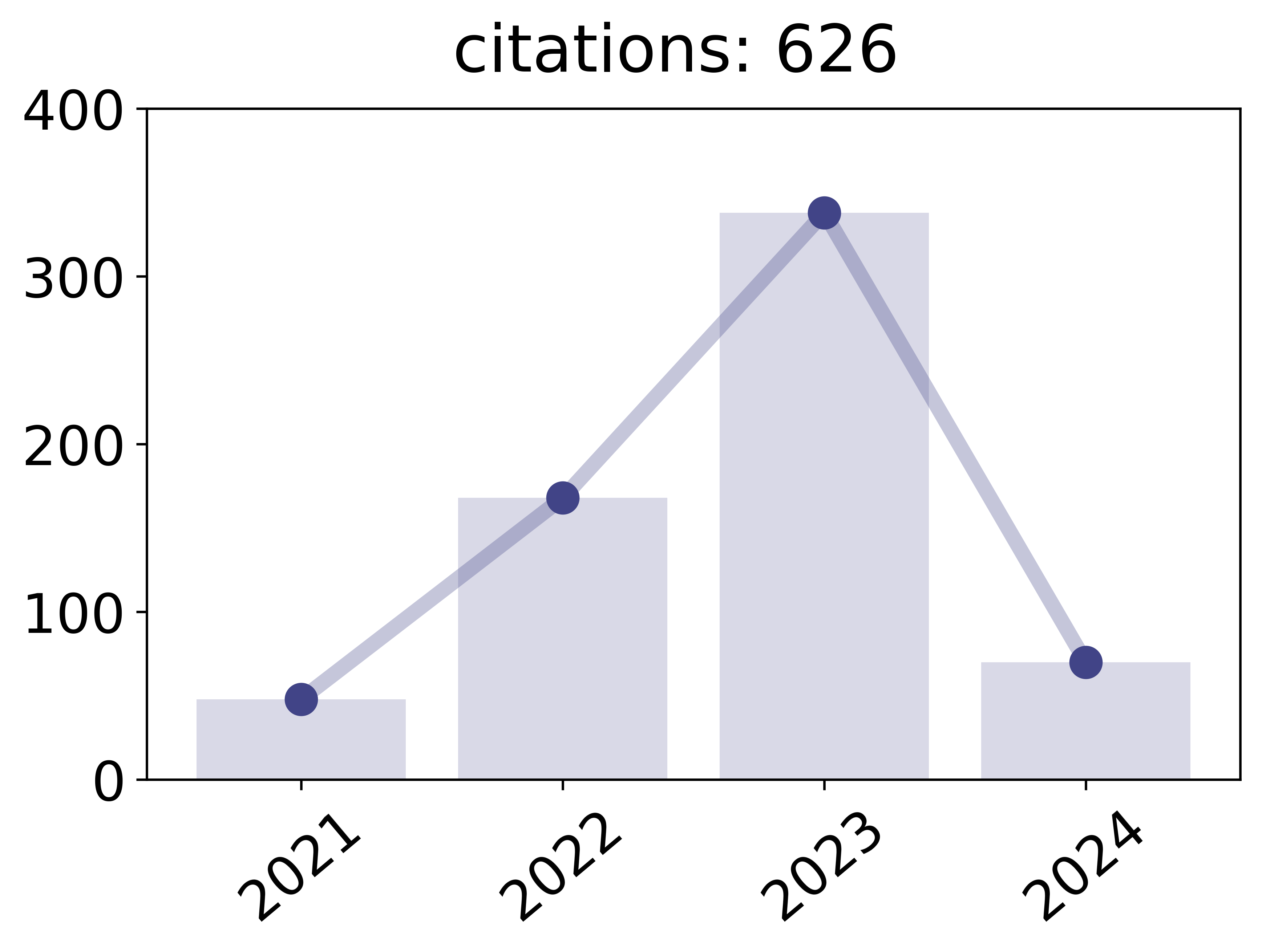

Li, Xiaoxiao; Jiang, Meirui; Zhang, Xiaofei; Kamp, Michael; Dou, Qi

FedBN: Federated Learning on Non-IID Features via Local Batch Normalization (Proceedings Article)

In: Proceedings of the 9th International Conference on Learning Representations (ICLR), 2021.

Tags: batch normalization, black-box parallelization, deep learning, federated learning

@inproceedings{li2021fedbn,

title = {FedBN: Federated Learning on Non-IID Features via Local Batch Normalization},

author = {Xiaoxiao Li and Meirui Jiang and Xiaofei Zhang and Michael Kamp and Qi Dou},

url = {https://michaelkamp.org/wp-content/uploads/2021/05/fedbn_federated_learning_on_non_iid_features_via_local_batch_normalization.pdf

https://michaelkamp.org/wp-content/uploads/2021/05/FedBN_appendix.pdf},

year = {2021},

date = {2021-05-03},

urldate = {2021-05-03},

booktitle = {Proceedings of the 9th International Conference on Learning Representations (ICLR)},

abstract = {The emerging paradigm of federated learning (FL) strives to enable collaborative training of deep models on the network edge without centrally aggregating raw data, thereby improving data privacy. In most cases, the assumption of independent and identically distributed samples across local clients does not hold for federated learning setups. Under this setting, neural network training performance may vary significantly according to the data distribution and even hurt training convergence. Most of the previous work has focused on a difference in the distribution of labels or client shifts. Unlike those settings, we address an important problem of FL in which local clients store examples with different distributions compared to other clients, e.g., different scanners/sensors in medical imaging or different scenery distributions in autonomous driving (highway vs. city); we denote this as feature shift non-iid. In this work, we propose an effective method that uses local batch normalization to alleviate the feature shift before averaging models. The resulting scheme, called FedBN, outperforms both classical FedAvg and the state-of-the-art for non-iid data (FedProx) in our extensive experiments. These empirical results are supported by a convergence analysis that shows in a simplified setting that FedBN has a faster convergence rate than FedAvg. Code is available at https://github.com/med-air/FedBN.},

keywords = {batch normalization, black-box parallelization, deep learning, federated learning},

pubstate = {published},

tppubtype = {inproceedings}

}

2020

Heppe, Lukas; Kamp, Michael; Adilova, Linara; Piatkowski, Nico; Heinrich, Danny; Morik, Katharina

Resource-Constrained On-Device Learning by Dynamic Averaging (Workshop)

Proceedings of the Workshop on Parallel, Distributed, and Federated Learning (PDFL) at ECMLPKDD, 2020.

Tags: black-box parallelization, distributed learning, edge computing, embedded, exponential family, FPGA, resource-efficient

@workshop{heppe2020resource,

title = {Resource-Constrained On-Device Learning by Dynamic Averaging},

author = {Lukas Heppe and Michael Kamp and Linara Adilova and Nico Piatkowski and Danny Heinrich and Katharina Morik},

url = {https://michaelkamp.org/wp-content/uploads/2020/10/Resource_Constrained_Federated_Learning-1.pdf},

year = {2020},

date = {2020-09-14},

urldate = {2020-09-14},

booktitle = {Proceedings of the Workshop on Parallel, Distributed, and Federated Learning (PDFL) at ECMLPKDD},

abstract = {The communication between data-generating devices is partially responsible for a growing portion of the world’s power consumption. Thus, reducing communication is vital from both an economic and an ecological perspective. For machine learning, on-device learning avoids sending raw data, which can reduce communication substantially. Furthermore, not centralizing the data protects privacy-sensitive data. However, most learning algorithms require hardware with high computation power and thus high energy consumption. In contrast, ultra-low-power processors, like FPGAs or micro-controllers, allow for energy-efficient learning of local models. Combined with communication-efficient distributed learning strategies, this reduces the overall energy consumption and enables applications that were previously impossible due to limited energy on local devices. The major challenge is then that low-power processors typically have only integer processing capabilities. This paper investigates an approach to communication-efficient on-device learning of integer exponential families that can be executed on low-power processors, is privacy-preserving, and effectively minimizes communication. The empirical evaluation shows that the approach can reach a model quality comparable to a centrally learned regular model with an order of magnitude less communication. Comparing the overall energy consumption, this reduces the required energy for solving the machine learning task by a significant amount.},

keywords = {black-box parallelization, distributed learning, edge computing, embedded, exponential family, FPGA, resource-efficient},

pubstate = {published},

tppubtype = {workshop}

}