2018

Kamp, Michael; Adilova, Linara; Sicking, Joachim; Hüger, Fabian; Schlicht, Peter; Wirtz, Tim; Wrobel, Stefan

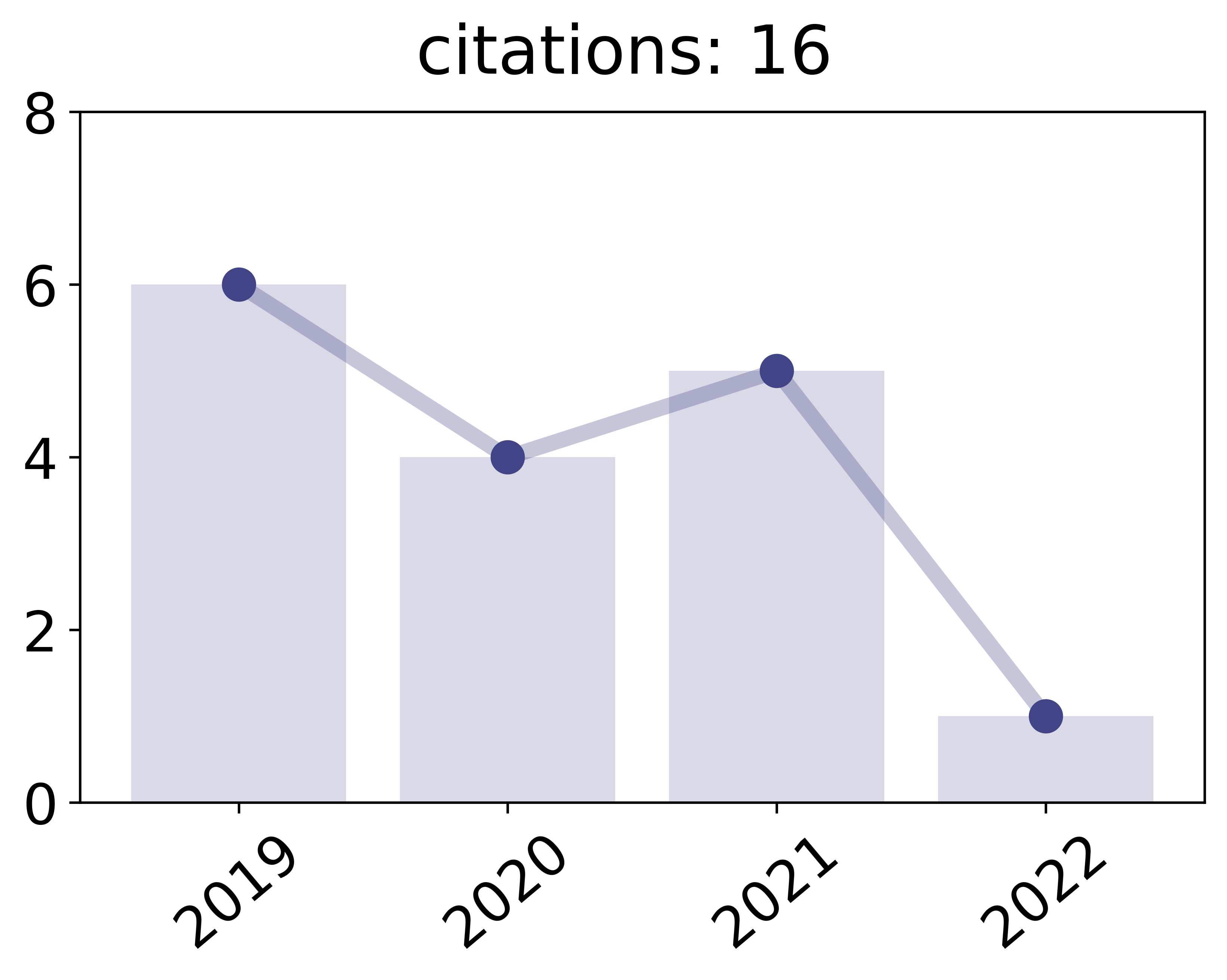

Efficient Decentralized Deep Learning by Dynamic Model Averaging (Proceedings Article)

In: Machine Learning and Knowledge Discovery in Databases, Springer, 2018.

Tags: decentralized, deep learning, federated learning

@inproceedings{kamp2018efficient,

title = {Efficient Decentralized Deep Learning by Dynamic Model Averaging},

author = {Michael Kamp and Linara Adilova and Joachim Sicking and Fabian Hüger and Peter Schlicht and Tim Wirtz and Stefan Wrobel},

url = {http://michaelkamp.org/wp-content/uploads/2018/07/commEffDeepLearning_extended.pdf},

year = {2018},

date = {2018-09-14},

urldate = {2018-09-14},

booktitle = {Machine Learning and Knowledge Discovery in Databases},

publisher = {Springer},

abstract = {We propose an efficient protocol for decentralized training of deep neural networks from distributed data sources. The proposed protocol allows to handle different phases of model training equally well and to quickly adapt to concept drifts. This leads to a reduction of communication by an order of magnitude compared to periodically communicating state-of-the-art approaches. Moreover, we derive a communication bound that scales well with the hardness of the serialized learning problem. The reduction in communication comes at almost no cost, as the predictive performance remains virtually unchanged. Indeed, the proposed protocol retains loss bounds of periodically averaging schemes. An extensive empirical evaluation validates major improvement of the trade-off between model performance and communication which could be beneficial for numerous decentralized learning applications, such as autonomous driving, or voice recognition and image classification on mobile phones.},

keywords = {decentralized, deep learning, federated learning},

pubstate = {published},

tppubtype = {inproceedings}

}


2017

Kamp, Michael; Boley, Mario; Missura, Olana; Gärtner, Thomas

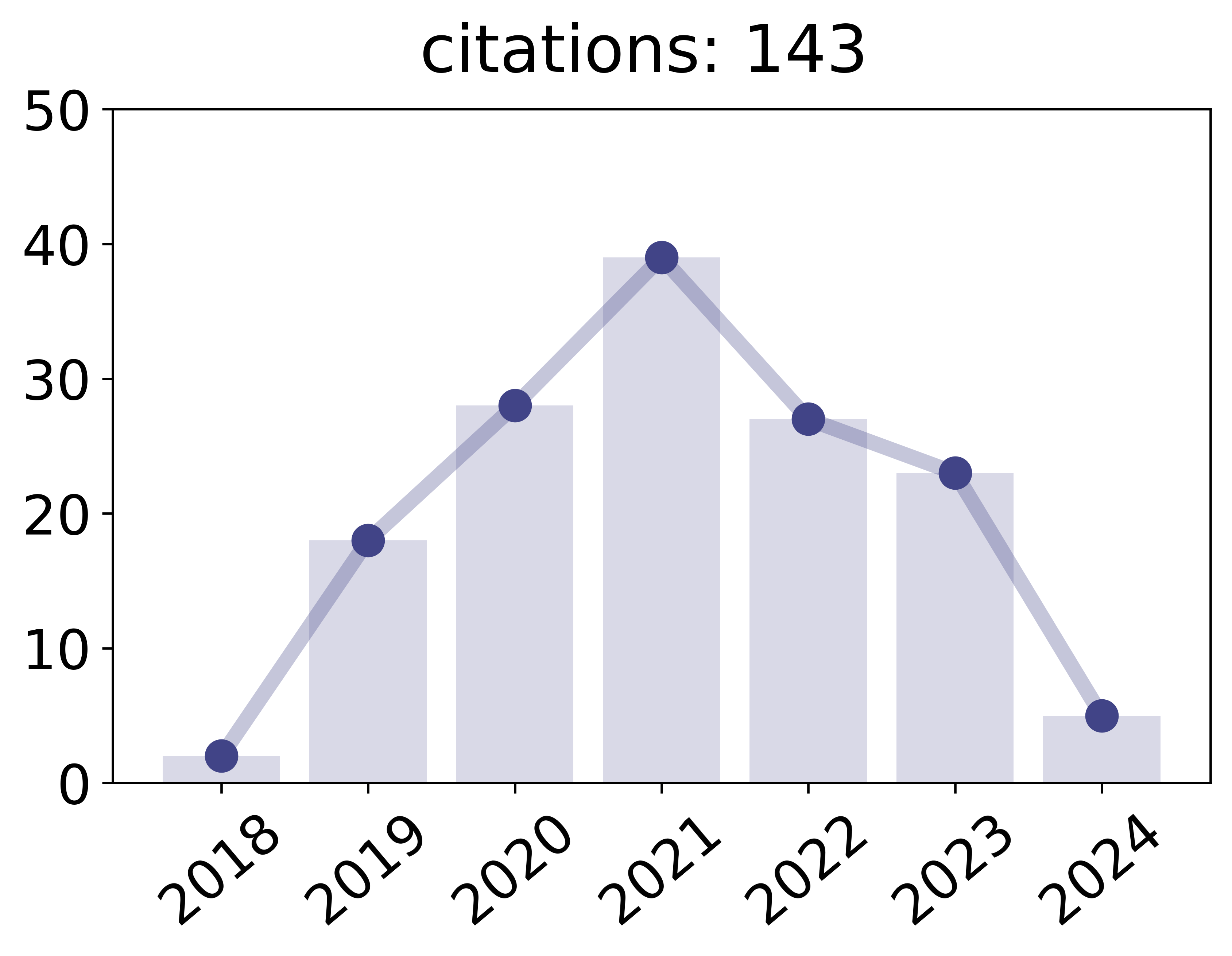

Effective Parallelisation for Machine Learning (Proceedings Article)

In: Advances in Neural Information Processing Systems, pp. 6480–6491, 2017.

Tags: decentralized, distributed, machine learning, parallelization, radon

@inproceedings{kamp2017effective,

title = {Effective Parallelisation for Machine Learning},

author = {Michael Kamp and Mario Boley and Olana Missura and Thomas Gärtner},

url = {http://papers.nips.cc/paper/7226-effective-parallelisation-for-machine-learning.pdf},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {Advances in Neural Information Processing Systems},

pages = {6480--6491},

abstract = {We present a novel parallelisation scheme that simplifies the adaptation of learning algorithms to growing amounts of data as well as growing needs for accurate and confident predictions in critical applications. In contrast to other parallelisation techniques, it can be applied to a broad class of learning algorithms without further mathematical derivations and without writing dedicated code, while at the same time maintaining theoretical performance guarantees. Moreover, our parallelisation scheme is able to reduce the runtime of many learning algorithms to polylogarithmic time on quasi-polynomially many processing units. This is a significant step towards a general answer to an open question on efficient parallelisation of machine learning algorithms in the sense of Nick's Class (NC). The cost of this parallelisation is in the form of a larger sample complexity. Our empirical study confirms the potential of our parallelisation scheme with fixed numbers of processors and instances in realistic application scenarios.},

keywords = {decentralized, distributed, machine learning, parallelization, radon},

pubstate = {published},

tppubtype = {inproceedings}

}
