2024

Yang, Fan; Bodic, Pierre Le; Kamp, Michael; Boley, Mario

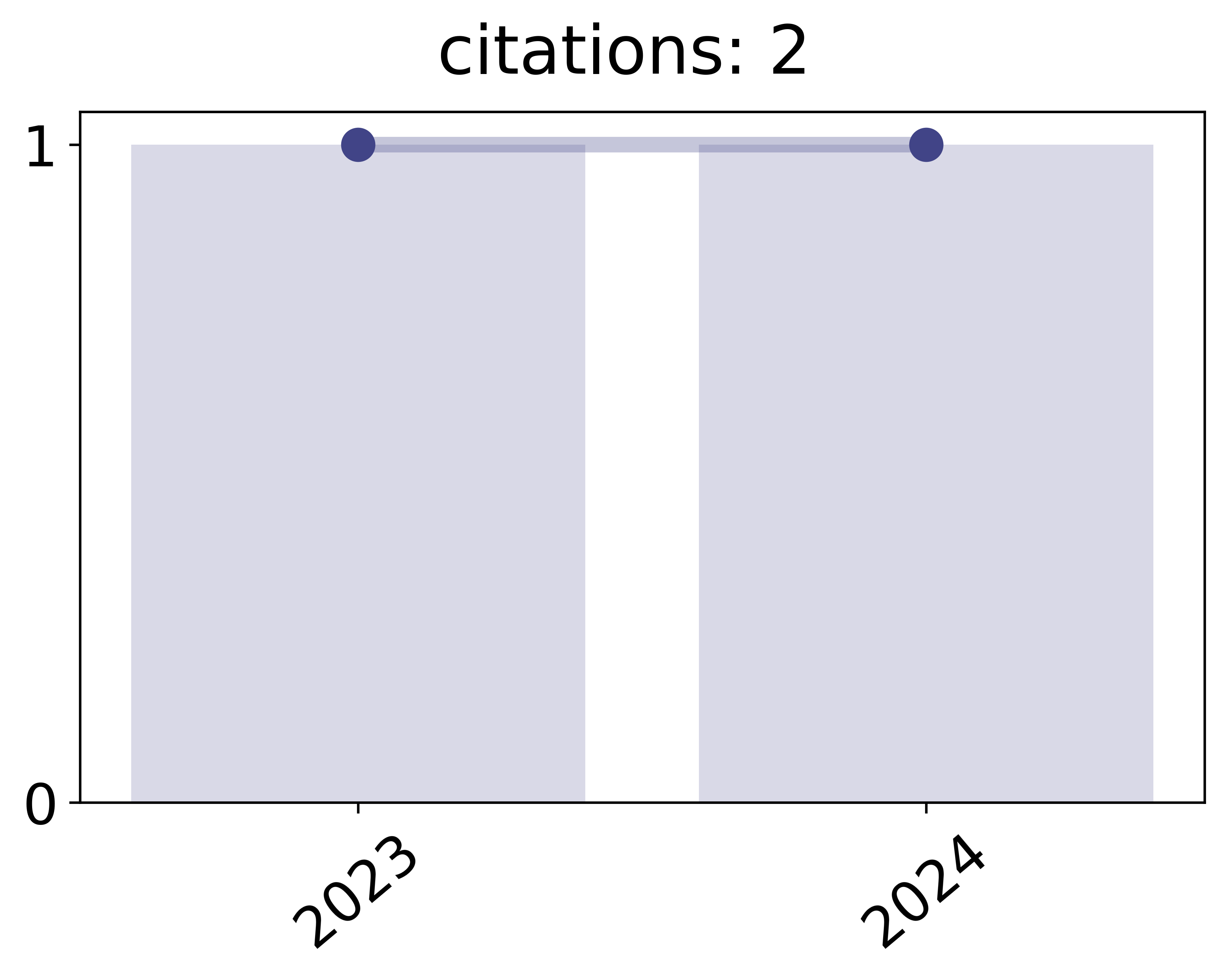

Orthogonal Gradient Boosting for Interpretable Additive Rule Ensembles Proceedings Article

In: Proceedings of the 26th International Conference on Artificial Intelligence and Statistics (AISTATS), 2024.

Abstract | Links | BibTeX | Tags: complexity, explainability, interpretability, interpretable, machine learning, rule ensemble, rule mining, XAI

@inproceedings{yang2024orthogonal,

title = {Orthogonal Gradient Boosting for Interpretable Additive Rule Ensembles},

author = {Fan Yang and Pierre Le Bodic and Michael Kamp and Mario Boley},

url = {https://michaelkamp.org/wp-content/uploads/2024/12/yang24b.pdf},

year = {2024},

date = {2024-05-02},

urldate = {2024-05-02},

booktitle = {Proceedings of the 26th International Conference on Artificial Intelligence and Statistics (AISTATS)},

abstract = {Gradient boosting of prediction rules is an efficient approach to learn potentially interpretable yet accurate probabilistic models. However, actual interpretability requires to limit the number and size of the generated rules, and existing boosting variants are not designed for this purpose. Though corrective boosting refits all rule weights in each iteration to minimise prediction risk, the included rule conditions tend to be sub-optimal, because commonly used objective functions fail to anticipate this refitting. Here, we address this issue by a new objective function that measures the angle between the risk gradient vector and the projection of the condition output vector onto the orthogonal complement of the already selected conditions. This approach correctly approximates the ideal update of adding the risk gradient itself to the model and favours the inclusion of more general and thus shorter rules. As we demonstrate using a wide range of prediction tasks, this significantly improves the comprehensibility/accuracy trade-off of the fitted ensemble. Additionally, we show how objective values for related rule conditions can be computed incrementally to avoid any substantial computational overhead of the new method.},

keywords = {complexity, explainability, interpretability, interpretable, machine learning, rule ensemble, rule mining, XAI},

pubstate = {published},

tppubtype = {inproceedings}

}

2023

Adilova, Linara; Abourayya, Amr; Li, Jianning; Dada, Amin; Petzka, Henning; Egger, Jan; Kleesiek, Jens; Kamp, Michael

FAM: Relative Flatness Aware Minimization Proceedings Article

In: Proceedings of the ICML Workshop on Topology, Algebra, and Geometry in Machine Learning (TAG-ML), 2023.

Links | BibTeX | Tags: deep learning, flatness, generalization, machine learning, relative flatness, theory of deep learning

@inproceedings{adilova2023fam,

title = {FAM: Relative Flatness Aware Minimization},

author = {Linara Adilova and Amr Abourayya and Jianning Li and Amin Dada and Henning Petzka and Jan Egger and Jens Kleesiek and Michael Kamp},

url = {https://michaelkamp.org/wp-content/uploads/2023/06/fam_regularization.pdf},

year = {2023},

date = {2023-07-22},

urldate = {2023-07-22},

booktitle = {Proceedings of the ICML Workshop on Topology, Algebra, and Geometry in Machine Learning (TAG-ML)},

keywords = {deep learning, flatness, generalization, machine learning, relative flatness, theory of deep learning},

pubstate = {published},

tppubtype = {inproceedings}

}

Michael Kamp Linara Adilova, Gennady Andrienko

Re-interpreting Rules Interpretability Journal Article

In: International Journal of Data Science and Analytics, 2023.

BibTeX | Tags: interpretable, machine learning, rule learning, XAI

@article{adilova2023reinterpreting,

title = {Re-interpreting Rules Interpretability},

author = {Linara Adilova, Michael Kamp, Gennady Andrienko, Natalia Andrienko},

year = {2023},

date = {2023-06-30},

urldate = {2023-06-30},

journal = {International Journal of Data Science and Analytics},

keywords = {interpretable, machine learning, rule learning, XAI},

pubstate = {published},

tppubtype = {article}

}

2019

Kamp, Michael

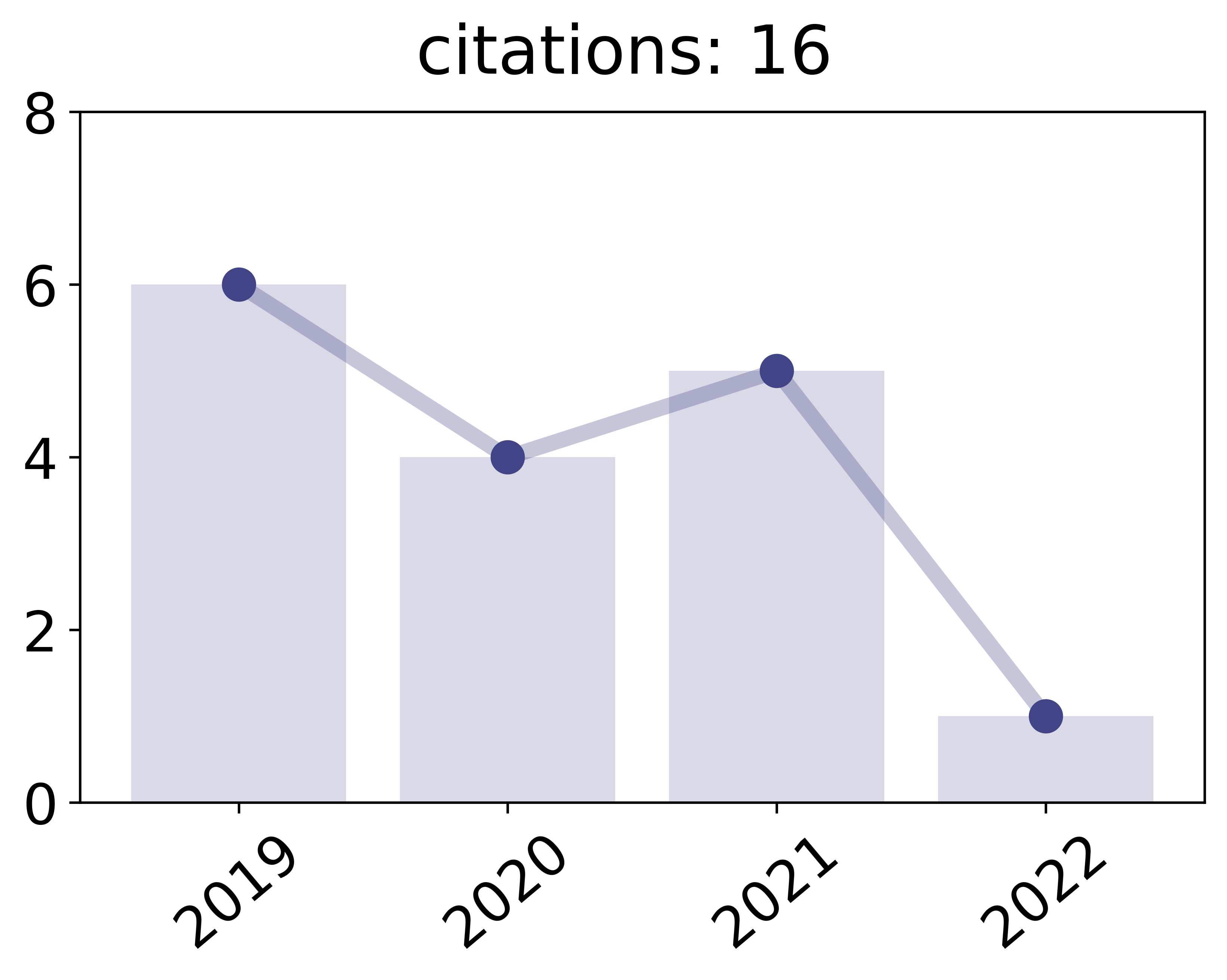

Black-Box Parallelization for Machine Learning PhD Thesis

Universitäts-und Landesbibliothek Bonn, 2019.

Abstract | Links | BibTeX | Tags: averaging, black-box, communication-efficient, convex optimization, deep learning, distributed, dynamic averaging, federated, learning theory, machine learning, parallelization, privacy, radon machine

@phdthesis{kamp2019black,

title = {Black-Box Parallelization for Machine Learning},

author = {Michael Kamp},

url = {https://d-nb.info/1200020057/34},

year = {2019},

date = {2019-01-01},

urldate = {2019-01-01},

school = {Universitäts-und Landesbibliothek Bonn},

abstract = {The landscape of machine learning applications is changing rapidly: large centralized datasets are replaced by high volume, high velocity data streams generated by a vast number of geographically distributed, loosely connected devices, such as mobile phones, smart sensors, autonomous vehicles or industrial machines. Current learning approaches centralize the data and process it in parallel in a cluster or computing center. This has three major disadvantages: (i) it does not scale well with the number of data-generating devices since their growth exceeds that of computing centers, (ii) the communication costs for centralizing the data are prohibitive in many applications, and (iii) it requires sharing potentially privacy-sensitive data. Pushing computation towards the data-generating devices alleviates these problems and allows to employ their otherwise unused computing power. However, current parallel learning approaches are designed for tightly integrated systems with low latency and high bandwidth, not for loosely connected distributed devices. Therefore, I propose a new paradigm for parallelization that treats the learning algorithm as a black box, training local models on distributed devices and aggregating them into a single strong one. Since this requires only exchanging models instead of actual data, the approach is highly scalable, communication-efficient, and privacy-preserving.

Following this paradigm, this thesis develops black-box parallelizations for two broad classes of learning algorithms. One approach can be applied to incremental learning algorithms, i.e., those that improve a model in iterations. Based on the utility of aggregations it schedules communication dynamically, adapting it to the hardness of the learning problem. In practice, this leads to a reduction in communication by orders of magnitude. It is analyzed for (i) online learning, in particular in the context of in-stream learning, which allows to guarantee optimal regret and for (ii) batch learning based on empirical risk minimization where optimal convergence can be guaranteed. The other approach is applicable to non-incremental algorithms as well. It uses a novel aggregation method based on the Radon point that allows to achieve provably high model quality with only a single aggregation. This is achieved in polylogarithmic runtime on quasi-polynomially many processors. This relates parallel machine learning to Nick’s class of parallel decision problems and is a step towards answering a fundamental open problem about the abilities and limitations of efficient parallel learning algorithms. An empirical study on real distributed systems confirms the potential of the approaches in realistic application scenarios.},

keywords = {averaging, black-box, communication-efficient, convex optimization, deep learning, distributed, dynamic averaging, federated, learning theory, machine learning, parallelization, privacy, radon machine},

pubstate = {published},

tppubtype = {phdthesis}

}

Following this paradigm, this thesis develops black-box parallelizations for two broad classes of learning algorithms. One approach can be applied to incremental learning algorithms, i.e., those that improve a model in iterations. Based on the utility of aggregations it schedules communication dynamically, adapting it to the hardness of the learning problem. In practice, this leads to a reduction in communication by orders of magnitude. It is analyzed for (i) online learning, in particular in the context of in-stream learning, which allows to guarantee optimal regret and for (ii) batch learning based on empirical risk minimization where optimal convergence can be guaranteed. The other approach is applicable to non-incremental algorithms as well. It uses a novel aggregation method based on the Radon point that allows to achieve provably high model quality with only a single aggregation. This is achieved in polylogarithmic runtime on quasi-polynomially many processors. This relates parallel machine learning to Nick’s class of parallel decision problems and is a step towards answering a fundamental open problem about the abilities and limitations of efficient parallel learning algorithms. An empirical study on real distributed systems confirms the potential of the approaches in realistic application scenarios.

2017

Gunar Ernis, Michael Kamp

Machine Learning für die smarte Produktion Journal Article

In: VDMA-Nachrichten, pp. 36-37, 2017.

Links | BibTeX | Tags: industry 4.0, machine learning, smart production

@article{kamp2017machine,

title = {Machine Learning für die smarte Produktion},

author = {Gunar Ernis, Michael Kamp},

editor = {Rebecca Pini},

url = {https://sud.vdma.org/documents/15012668/22571546/VDMA-Nachrichten%20Smart%20Data%2011-2017_1513086481204.pdf/c5767569-504e-4f64-8dba-8e7bdd06c18e},

year = {2017},

date = {2017-11-01},

issuetitle = {Smart Data - aus Daten Gold machen},

journal = {VDMA-Nachrichten},

pages = {36-37},

publisher = {Verband Deutscher Maschinen- und Anlagenbau e.V.},

keywords = {industry 4.0, machine learning, smart production},

pubstate = {published},

tppubtype = {article}

}

Kamp, Michael; Boley, Mario; Missura, Olana; Gärtner, Thomas

Effective Parallelisation for Machine Learning Proceedings Article

In: Advances in Neural Information Processing Systems, pp. 6480–6491, 2017.

Abstract | Links | BibTeX | Tags: decentralized, distributed, machine learning, parallelization, radon

@inproceedings{kamp2017effective,

title = {Effective Parallelisation for Machine Learning},

author = {Michael Kamp and Mario Boley and Olana Missura and Thomas Gärtner},

url = {http://papers.nips.cc/paper/7226-effective-parallelisation-for-machine-learning.pdf},

year = {2017},

date = {2017-01-01},

urldate = {2017-01-01},

booktitle = {Advances in Neural Information Processing Systems},

pages = {6480--6491},

abstract = {We present a novel parallelisation scheme that simplifies the adaptation of learning algorithms to growing amounts of data as well as growing needs for accurate and confident predictions in critical applications. In contrast to other parallelisation techniques, it can be applied to a broad class of learning algorithms without further mathematical derivations and without writing dedicated code, while at the same time maintaining theoretical performance guarantees. Moreover, our parallelisation scheme is able to reduce the runtime of many learning algorithms to polylogarithmic time on quasi-polynomially many processing units. This is a significant step towards a general answer to an open question on efficient parallelisation of machine learning algorithms in the sense of Nick's Class (NC). The cost of this parallelisation is in the form of a larger sample complexity. Our empirical study confirms the potential of our parallelisation scheme with fixed numbers of processors and instances in realistic application scenarios.},

keywords = {decentralized, distributed, machine learning, parallelization, radon},

pubstate = {published},

tppubtype = {inproceedings}

}